Data annotation: Techniques for different data types

These techniques let AI models extract meaning from unstructured text, identify objects in images, process spoken language, and analyze video content.

Unlocking the power of AI involves teaching machines to understand our world, and that’s where data annotation comes in. From pictures and text to videos and sensor data, different types of information need their own ways of being ‘taught’ to computers. In this article, we’ll explore data annotation techniques tailored to different types of data.

What is data annotation?

Data annotation is the process of labeling or tagging data to make it understandable for machines. It’s similar to attaching descriptive labels to elements within different types of data, such as images, text, or videos, so that AI can recognize and comprehend these elements. For instance, to train an AI to identify cats in images, annotators mark where each cat is located in every image, giving the model the labeled examples it needs to learn to detect and classify cats accurately.

This process is fundamental in various AI applications, allowing machines to learn from labeled examples and generalize that learning to new, unseen data. Whether it’s identifying objects in images, recognizing sentiment in text, or segmenting specific areas in medical images, data annotation serves as the foundation for training AI models. It helps machines understand patterns and features within different datasets, paving the way for enhanced accuracy and performance across a wide range of tasks in the AI landscape.

As a practical example, Tesla leverages data annotation through in-house data collection efforts. Tesla vehicles are equipped with cameras and sensors that continuously gather real-world data, including images of traffic scenarios, pedestrian behavior, and road conditions. This data is then annotated by a team of trained professionals who manually label each object or element in the images, providing the AI with the information it needs to understand its environment. Tesla has collected more than 780 million miles of driving data and adds another million every 10 hours. All of this is done so that Tesla cars can “see” the road they are driving on.

What data annotation methods are used today?

There are a variety of data types, including images, videos, text, and audio. Different data annotation methods are used depending on the specific data type and the desired outcome. Some of the most common data annotation methods include:

1. Image annotation

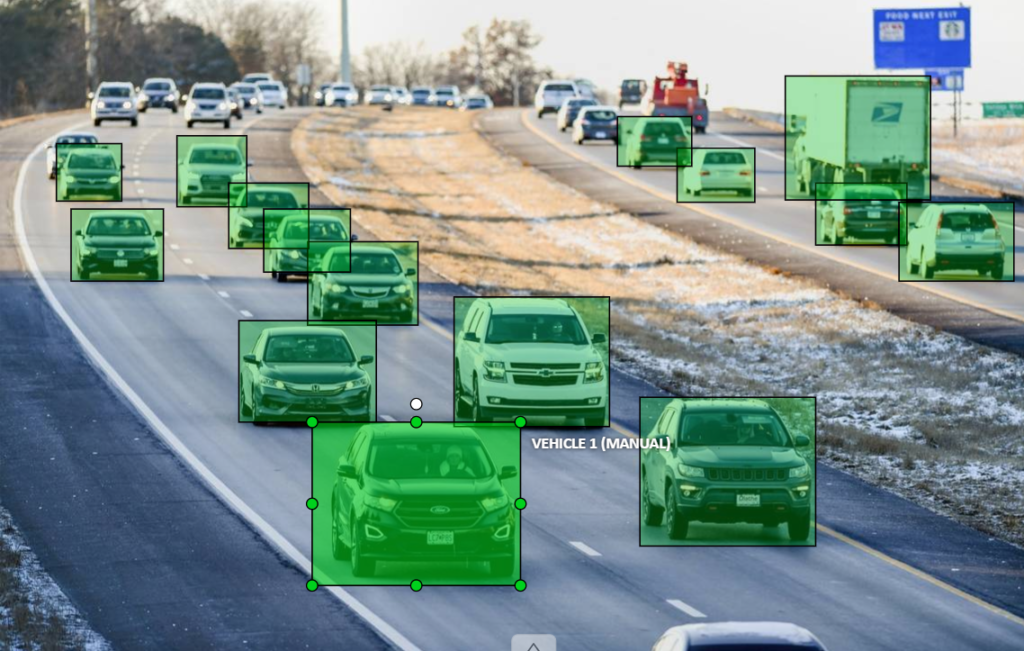

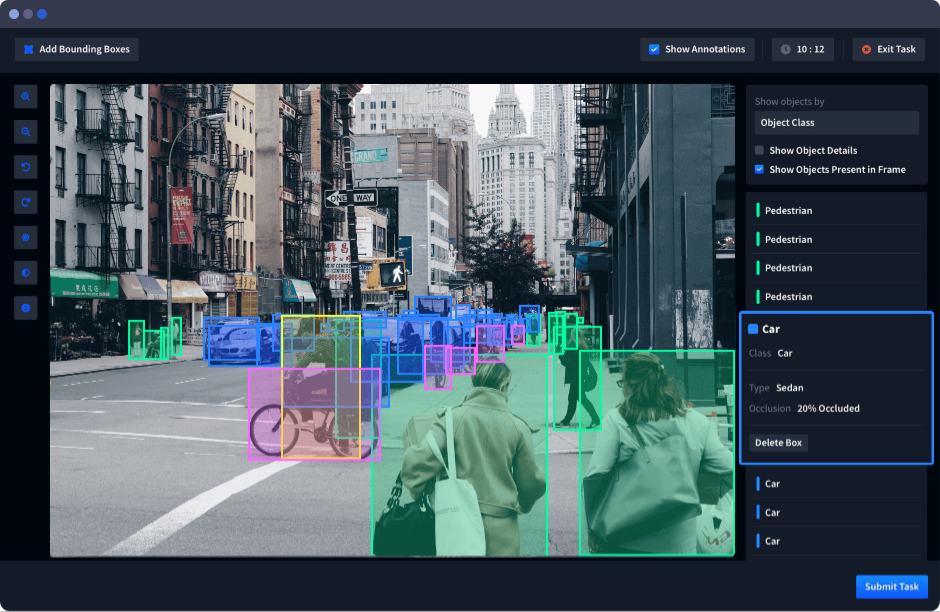

This involves labeling objects, scenes, and actions in images. Common techniques include bounding boxes, semantic segmentation, and landmarking. Self-driving cars rely on image annotation to identify objects like cars, pedestrians, and traffic signs in their surroundings. This information is crucial for the car’s AI system to navigate safely on the roads.

2. Text annotation

This involves labeling text with categories, such as sentiment, intent, or named entities. Common techniques include sentiment analysis, named entity recognition, and part-of-speech tagging. Social media companies use text annotation to identify sentiment, detect spam, and classify content into different categories. This helps them provide a better user experience and filter out inappropriate content.

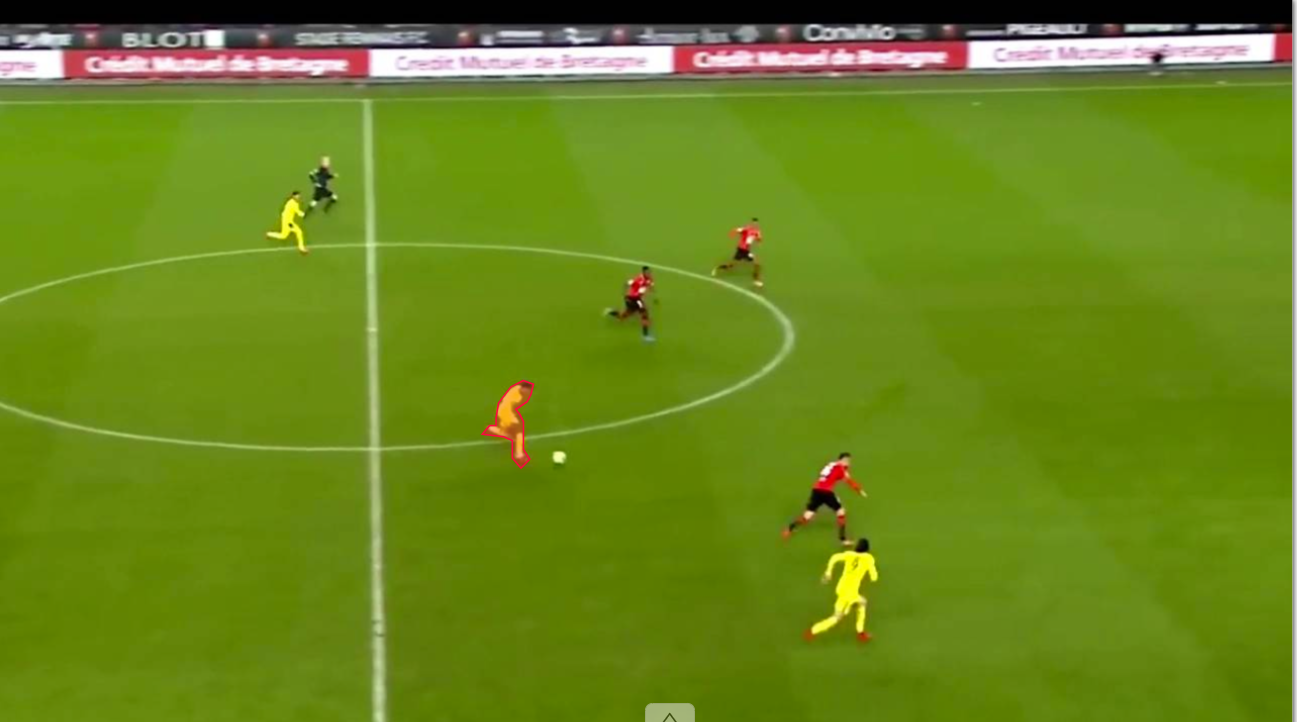

3. Video annotation

This involves labeling objects, scenes, and actions in videos. Common techniques include tracking objects, analyzing events, and identifying key frames. Sports analytics companies use video annotation to track players’ movements, analyze game strategies, and generate performance insights. This information is valuable for coaches and teams to improve their performance.

4. Audio/voice annotation

This involves labeling sounds, such as speech, music, and environmental sounds. Common techniques include speech recognition, speaker identification, and audio event detection. Voice assistants like Alexa and Siri rely on audio annotation to understand user commands. This process involves labeling audio clips with words, phrases, and speaker identification information.

The choice of data annotation method depends on the specific task at hand. For example, if the goal is to train a machine learning model to identify cats in images, then bounding boxes would be an appropriate technique. If the goal is to train a model to transcribe speech, then speech recognition would be a more suitable technique. We will explore the various types of annotation below.

Image Annotation

Data labels in image annotation provide essential information to the models, allowing them to recognize and understand objects, patterns, or features within the images. Image annotation plays a crucial role in various fields, including computer vision, medical imaging, and autonomous systems.

Common Image Annotation Techniques

Bounding Boxes

Bounding boxes are rectangular shapes drawn around objects in an image. They are used to identify the location and approximate size of objects. This technique is commonly used for object detection tasks, such as identifying cars, pedestrians, or traffic signs in images.
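To make this concrete, here is a minimal sketch of what a bounding-box label might look like as data. It assumes boxes are stored as (x, y, width, height) with (x, y) the top-left corner, similar to the COCO convention, and adds an intersection-over-union (IoU) check, a common way to measure how closely two annotators’ boxes for the same object agree. `BoxLabel` and `iou` are illustrative names, not part of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass
class BoxLabel:
    """One bounding-box annotation (illustrative, COCO-like x/y/width/height)."""
    label: str
    x: float       # left edge in pixels
    y: float       # top edge in pixels
    width: float
    height: float

    def corners(self) -> tuple[float, float, float, float]:
        """Return (x_min, y_min, x_max, y_max)."""
        return (self.x, self.y, self.x + self.width, self.y + self.height)


def iou(a: BoxLabel, b: BoxLabel) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    ax1, ay1, ax2, ay2 = a.corners()
    bx1, by1, bx2, by2 = b.corners()
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a.width * a.height + b.width * b.height - inter
    return inter / union if union > 0 else 0.0


# Two annotators box the same car, slightly offset from each other.
first = BoxLabel("car", 100, 50, 200, 100)
second = BoxLabel("car", 120, 60, 200, 100)
print(round(iou(first, second), 3))  # 0.681
```

Projects often set a minimum IoU below which a box is sent back for review; the exact threshold is a per-project choice.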

Polygons

Polygons are shapes that can be defined by multiple vertices or points. They are used to outline objects with irregular shapes, such as animals, furniture, or plants. Polygons provide more precise annotations compared to bounding boxes, making them suitable for tasks like object segmentation or instance segmentation.
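The precision gain is easy to quantify. The sketch below, using hypothetical vertex coordinates, computes a polygon’s area with the shoelace formula and compares it with the area of the tightest bounding box around the same outline.

```python
def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon, via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the outline
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bounding_box(points):
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


# An L-shaped object outlined with six vertices (hypothetical pixel coordinates).
outline = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)]
x_min, y_min, x_max, y_max = bounding_box(outline)
box_area = (x_max - x_min) * (y_max - y_min)
print(polygon_area(outline), box_area)  # 6.0 12
```

For this L-shaped outline the polygon covers 6 square units while its bounding box covers 12, so half of the box would be background.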

Semantic Segmentation

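Semantic segmentation involves labeling each pixel in an image with a specific class or category. This technique provides a detailed, pixel-level understanding of the image’s content, enabling the model to separate regions that belong to different classes, such as road, car, and pedestrian. Because every object of the same class receives the same label, telling individual objects apart (for example, two adjacent cars) requires instance segmentation. Semantic segmentation is widely used in applications like autonomous driving, medical imaging analysis, and image-based search engines.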
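A semantic segmentation label is essentially a grid the same size as the image in which every pixel holds one class ID. The toy example below uses a hypothetical three-class scheme and a 4x6 “image” to show that structure and how per-class pixel counts fall out of it.

```python
from collections import Counter

# Hypothetical class IDs for a tiny street scene.
CLASSES = {0: "road", 1: "car", 2: "pedestrian"}

# A 4x6 mask: one class ID per pixel. The two cars (columns 1-2 and 4-5)
# share class ID 1, so the mask alone does not say there are two of them.
mask = [
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 2, 0],
    [0, 0, 0, 0, 2, 0],
]


def class_pixel_counts(mask, classes):
    """Count how many pixels carry each class label."""
    counts = Counter(pixel for row in mask for pixel in row)
    return {name: counts.get(class_id, 0) for class_id, name in classes.items()}


print(class_pixel_counts(mask, CLASSES))  # {'road': 14, 'car': 8, 'pedestrian': 2}
```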

3D Cuboids

3D cuboids are used to represent objects in three-dimensional space. They are defined by eight vertices and can be used to annotate objects in images captured from depth sensors or multiple cameras. 3D cuboids are particularly useful for tasks like augmented reality applications and autonomous navigation.
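In practice, the eight vertices are usually derived from a more compact description. A minimal sketch, assuming a common but not universal parameterization (center point, length/width/height, and a yaw rotation about the vertical axis), shows how the corners follow from those seven numbers. Axis and rotation conventions differ between datasets and tools.

```python
import math


def cuboid_vertices(cx, cy, cz, length, width, height, yaw):
    """Return the eight corners of a 3D box.

    Assumed parameterization: center (cx, cy, cz), dimensions along the
    object's own x/y/z axes, and `yaw` in radians about the vertical z-axis.
    """
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                # Rotate the offset around z, then translate to the center.
                x = cx + dx * cos_y - dy * sin_y
                y = cy + dx * sin_y + dy * cos_y
                corners.append((x, y, cz + dz))
    return corners


# A hypothetical 4.5 m x 1.8 m x 1.5 m car, 10 m ahead, rotated 90 degrees.
for corner in cuboid_vertices(10.0, 0.0, 0.75, 4.5, 1.8, 1.5, math.pi / 2):
    print(tuple(round(v, 2) for v in corner))
```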

Key Points and Landmarks

Key points and landmarks are used to identify specific points or features on objects. They are often used for tasks like facial recognition, action recognition, and gesture recognition. Key points and landmarks provide more precise information than bounding boxes or polygons, making them suitable for tasks that require detailed understanding of object shapes and features.
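Keypoint labels are often stored as a flat list of (x, y, visibility) triples. The sketch below follows the COCO visibility convention (0 = not labeled, 1 = labeled but occluded, 2 = labeled and visible); the three-point schema is a hypothetical shortened version, since COCO’s person schema defines 17 keypoints.

```python
# Hypothetical shortened schema; COCO's person schema defines 17 keypoints.
KEYPOINT_NAMES = ["nose", "left_eye", "right_eye"]


def parse_keypoints(flat, names=KEYPOINT_NAMES):
    """Turn a flat [x1, y1, v1, x2, y2, v2, ...] list into a dict by name.

    Visibility v follows the COCO convention: 0 = not labeled,
    1 = labeled but occluded, 2 = labeled and visible.
    """
    if len(flat) != 3 * len(names):
        raise ValueError("expected one (x, y, v) triple per keypoint")
    points = {}
    for i, name in enumerate(names):
        x, y, v = flat[3 * i:3 * i + 3]
        if v == 0:
            continue  # the annotator did not place this point
        points[name] = {"x": x, "y": y, "visible": v == 2}
    return points


# Nose visible, left eye occluded (position estimated), right eye not labeled.
face = parse_keypoints([120, 80, 2, 110, 70, 1, 0, 0, 0])
print(face)
```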

Lines and Splines

Lines and splines are used to represent curves or paths in images. They are often used for tasks like lane detection, road markings identification, and tracking object trajectories. Lines and splines provide a more flexible representation of thin, elongated structures, such as lane markings, which bounding boxes and polygons capture poorly.
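For a lane marking, an annotator typically clicks a handful of points and the tool fits a smooth curve through them. One widely used interpolating curve is the uniform Catmull-Rom spline, which passes through every clicked point. The sketch below assumes that choice (tools differ) and uses made-up pixel coordinates.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Point on a uniform Catmull-Rom segment: equals p1 at t=0 and p2 at t=1."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (-a + c) * t + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def densify(control_points, samples_per_segment=5):
    """Sample a smooth curve through clicked points. The end points are
    duplicated so the curve also passes through the first and last clicks."""
    pts = [control_points[0], *control_points, control_points[-1]]
    curve = []
    for i in range(1, len(pts) - 2):  # one segment per pair of clicked points
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            curve.append(catmull_rom(pts[i - 1], pts[i], pts[i + 1], pts[i + 2], t))
    curve.append(tuple(float(v) for v in control_points[-1]))
    return curve


# Four hypothetical clicks along a lane line, from the bottom of the image up.
lane = [(100, 400), (150, 300), (180, 200), (195, 100)]
dense = densify(lane)
print(len(dense), dense[0], dense[-1])  # 16 (100.0, 400.0) (195.0, 100.0)
```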

An Example Beyond Self-Driving Cars: Retail Product Image Annotation

In the retail industry, image annotation plays a crucial role in product categorization, visual search, and automated image tagging. Here are some specific examples of image annotation techniques used in retail:

- Bounding boxes: Used to identify and label individual products within an image, such as clothing items, electronics, or household appliances.

- Polygons: Used to outline irregular product shapes, such as furniture, apparel, or decorative items.

- Semantic segmentation: Used to segment multiple products within an image and assign them to specific categories, such as clothing, footwear, accessories, or home décor.

- Attributes annotation: Used to label products with additional attributes, such as color, size, material, or brand.

- Landmark annotation: Used to identify specific points on products, such as buttons, zippers, or seams, for tasks like product defect detection or style analysis.

By utilizing these image annotation techniques, retail companies can enhance their product search functionality, improve product categorization, and automate image tagging processes, ultimately leading to a more efficient and user-friendly shopping experience.
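Attribute annotation only pays off if labels stay consistent, so projects usually define a fixed schema of allowed values. A minimal validation sketch, assuming a hypothetical apparel schema and label format:

```python
# Hypothetical attribute schema for an apparel catalog; real projects define their own.
SCHEMA = {
    "category": {"shirt", "shoe", "bag"},
    "color": {"black", "white", "red", "blue"},
    "size": {"S", "M", "L"},
}
GEOMETRY_FIELDS = {"bbox"}  # allowed alongside the attributes


def validate_product_label(label):
    """Return a list of problems; an empty list means the label passes."""
    problems = []
    for field, allowed in SCHEMA.items():
        value = label.get(field)
        if value is None:
            problems.append(f"missing '{field}'")
        elif value not in allowed:
            problems.append(f"invalid {field} '{value}'")
    # Sorted so the report order is stable from run to run.
    for field in sorted(label.keys() - SCHEMA.keys() - GEOMETRY_FIELDS):
        problems.append(f"unknown field '{field}'")
    return problems


good = {"bbox": [34, 20, 180, 240], "category": "shirt", "color": "red", "size": "M"}
bad = {"bbox": [10, 10, 90, 60], "category": "shoe", "color": "navy", "notes": "?"}
print(validate_product_label(good))  # []
print(validate_product_label(bad))
```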

Text Annotation: Understanding Human Expression

Text annotation is the process of assigning labels or categories to text data, typically used for training machine learning models. These labels provide essential information to the models, enabling them to extract meaning, understand context, and perform tasks like sentiment analysis, intent classification, semantic understanding, and named entity recognition.

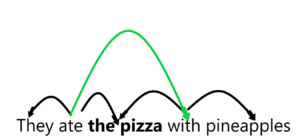

Sentiment Analysis

Sentiment analysis involves identifying the emotional tone or opinion expressed in a piece of text. It is used to classify text as positive, negative, neutral, or mixed. Sentiment analysis is widely used in applications like social media monitoring, customer service analysis, and product review analysis.
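Because sentiment is subjective, the same text is often labeled by several annotators and the labels are then combined. A simple majority-vote sketch, assuming a hypothetical two-thirds agreement threshold below which an item is flagged rather than labeled:

```python
from collections import Counter


def aggregate_sentiment(labels, min_agreement=2 / 3):
    """Majority vote over several annotators' labels for one text.

    Returns the winning label, or None when agreement falls below
    `min_agreement` (such items are typically routed to a reviewer).
    """
    if not labels:
        return None
    label, votes = Counter(labels).most_common(1)[0]
    return label if votes / len(labels) >= min_agreement else None


print(aggregate_sentiment(["positive", "positive", "neutral"]))  # positive
print(aggregate_sentiment(["positive", "negative", "mixed"]))    # None
```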

Intent Classification

Intent classification involves identifying the underlying purpose or goal behind a piece of text. It is used to determine the user’s intention in a conversation, such as asking a question, making a request, or providing feedback. Intent classification is commonly used in chatbots, virtual assistants, and customer support systems.

Semantic Understanding

Semantic understanding involves extracting deeper meaning and context from text data. It goes beyond simple word identification to understand the relationships between words, phrases, and entities. Semantic understanding is crucial for natural language processing tasks such as machine translation and information extraction.

Named Entity Recognition (NER)

Named entity recognition involves identifying and classifying specific types of entities mentioned in text, such as people, organizations, locations, and dates. NER is used in tasks like extracting information from news articles, identifying relevant entities in customer support tickets, and building knowledge graphs.
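NER annotations are commonly recorded as character-offset spans and then converted to per-token tags in the BIO scheme (B- marks the first token of an entity, I- a following token inside it, O a token outside any entity). A minimal sketch, assuming whitespace tokenization and spans that line up with token boundaries:

```python
def spans_to_bio(text, spans):
    """Convert (start, end, label) character spans into (token, BIO tag) pairs.

    Assumes whitespace tokenization, `end` exclusive, and spans that start
    and end on token boundaries.
    """
    pairs = []
    position = 0
    for token in text.split():
        start = text.index(token, position)  # locate this token's offset
        end = start + len(token)
        position = end
        tag = "O"
        for span_start, span_end, label in spans:
            if span_start <= start and end <= span_end:
                tag = ("B-" if start == span_start else "I-") + label
                break
        pairs.append((token, tag))
    return pairs


text = "Maria Lopez joined Acme Corp in Berlin"
spans = [(0, 11, "PER"), (19, 28, "ORG"), (32, 38, "LOC")]
for token, tag in spans_to_bio(text, spans):
    print(token, tag)
```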

Examples of Text Annotation Applications

- Social Media Sentiment Analysis: Identifying the overall sentiment of social media posts to understand public opinion about a brand, product, or event.

- Customer Service Intent Classification: Classifying customer inquiries into categories like complaints, requests for information, or feedback, to route them to the appropriate department.

- Medical Document Semantic Understanding: Extracting key information from medical records, such as patient demographics, diagnoses, and treatment plans, for data analysis and clinical decision support.

- News Article Named Entity Recognition: Identifying people, organizations, and locations mentioned in news articles to facilitate information extraction and summarization.

Text annotation is a powerful tool for extracting meaning and insights from vast amounts of text data. By applying these annotation techniques, we can train machine learning models to perform a wide range of tasks, from understanding customer feedback to extracting information from medical records, ultimately leading to improved decision-making, enhanced customer service, and advanced technological applications.

Voice Annotation: Capturing the Essence of Human Speech

Voice annotation, also known as audio annotation, involves labeling and classifying audio data, primarily speech, to train machine learning models for tasks like speech recognition, speaker identification, and audio event detection. By annotating audio clips, we provide valuable information to the models, enabling them to understand the nuances of human speech, identify different speakers, and recognize various sounds within audio recordings.

Common Voice Annotation Techniques

Speech Recognition

Speech recognition involves transcribing spoken words into text. It is used to create voice-to-text applications, such as dictation software and virtual assistants. Voice annotation for speech recognition involves labeling audio clips with the corresponding text transcripts.
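Transcription labels are commonly checked with word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn a transcript into a reviewed reference, divided by the number of reference words. A minimal sketch, assuming plain whitespace tokenization with no case or punctuation normalization (real pipelines usually normalize first):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference,
    computed with a word-level Levenshtein edit distance."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = edits needed to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / max(len(ref), 1)


reference = "patient reports mild chest pain"
transcript = "patient report mild chest pain today"
print(word_error_rate(reference, transcript))  # 0.4
```

Here one substitution (“reports” to “report”) and one insertion (“today”) give 2 errors over 5 reference words.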

Speaker Identification

Speaker identification involves identifying the person speaking in an audio recording. It is used in applications like security systems, access control, and forensic analysis. Voice annotation for speaker identification involves labeling audio clips with the corresponding speaker IDs.
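Speaker labels are often stored as time-stamped segments. The sketch below assumes a hypothetical (start, end, speaker) format, totals each speaker’s talk time, and flags overlapping speech from different speakers, a case annotation guidelines need to address explicitly.

```python
from collections import defaultdict

# Hypothetical segments: (start_seconds, end_seconds, speaker_id).
segments = [
    (0.0, 4.2, "spk_A"),
    (4.2, 9.0, "spk_B"),
    (8.5, 12.0, "spk_A"),   # starts before spk_B finishes: overlapping speech
    (12.0, 15.5, "spk_B"),
]


def speaking_time(segments):
    """Total seconds of speech per speaker."""
    totals = defaultdict(float)
    for start, end, speaker in segments:
        if end <= start:
            raise ValueError(f"segment {start}-{end} has no duration")
        totals[speaker] += end - start
    return dict(totals)


def overlaps(segments):
    """Pairs of segments from different speakers that overlap in time."""
    ordered = sorted(segments)  # by start time
    found = []
    for i, (s1, e1, spk1) in enumerate(ordered):
        for s2, e2, spk2 in ordered[i + 1:]:
            if s2 >= e1:
                break  # remaining segments start no earlier, so none overlap
            if spk1 != spk2:
                found.append(((s1, e1, spk1), (s2, e2, spk2)))
    return found


print(speaking_time(segments))
print(overlaps(segments))
```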

Audio Event Detection

Audio event detection involves identifying and classifying specific sounds within an audio recording. It is used in applications like environmental monitoring, surveillance systems, and content moderation. Voice annotation for audio event detection involves labeling audio clips with the corresponding event categories, such as speech, music, laughter, or environmental sounds.
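Event labels are usually recorded with an onset and offset time, while many models make predictions per short frame. A minimal sketch of converting one to the other, assuming a hypothetical 0.5-second frame hop and that a frame receives every event that overlaps it:

```python
import math


def events_to_frames(events, duration, hop=0.5):
    """Turn (onset_s, offset_s, label) events into one label set per frame.

    Frame k covers [k * hop, (k + 1) * hop); it receives every event that
    overlaps it, so several labels can share a frame.
    """
    n_frames = math.ceil(duration / hop)
    frames = [set() for _ in range(n_frames)]
    for onset, offset, label in events:
        first = int(onset // hop)                              # frame holding the onset
        last = min(n_frames - 1, math.ceil(offset / hop) - 1)  # frame just before offset
        for k in range(first, last + 1):
            frames[k].add(label)
    return frames


# Hypothetical labels for a 3-second clip: laughter overlaps the speech.
events = [(0.2, 1.1, "speech"), (0.9, 1.4, "laughter"), (2.6, 3.0, "dog_bark")]
frames = events_to_frames(events, duration=3.0)
print([sorted(f) for f in frames])
```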

Examples of Voice Annotation Applications

- Speech-to-Text Transcription for Medical Records: Transcribing doctors’ dictated medical notes to improve efficiency and reduce errors in patient records.

- Speaker Identification for Secure Access Control: Identifying authorized speakers in voice-based access control systems to enhance security measures in sensitive areas.

- Audio Event Detection for Environmental Monitoring: Detecting specific sounds like animal calls, machinery noise, or water leaks to monitor environmental conditions and identify potential issues.

- Content Moderation for Voice-Based Social Media: Identifying inappropriate content, such as hate speech or offensive language, in voice-based social media platforms to maintain a safe and respectful online community.

Voice annotation plays a crucial role in various industries, from healthcare and finance to security and entertainment. By labeling and classifying audio data, we empower machine learning models to understand the complexities of human speech, enabling a wide range of applications that enhance our daily lives and improve various aspects of our interactions with technology.

Video Annotation: Understanding Visual Sequences

Video annotation is the process of labeling and classifying video data to train machine learning models for tasks like object tracking, action recognition, and video summarization. By annotating video frames or segments, we provide essential information to the models, enabling them to understand the temporal nature of videos, identify objects in motion, and recognize patterns or events within video sequences.

Common Video Annotation Techniques

Object Tracking

Object tracking involves following the movement of objects over time in a video sequence. It is used in applications like surveillance systems, sports analytics, and autonomous vehicles. Video annotation for object tracking involves labeling an object’s bounding box in each frame of the video and giving it the same track ID throughout, so the model can tell that it is the same object from frame to frame.
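In practice, annotators rarely draw a box on every frame by hand; many annotation tools let them label a few keyframes and interpolate in between. A minimal sketch of linear interpolation for one track, assuming boxes stored as (x, y, width, height) and a hypothetical track ID:

```python
def interpolate_track(keyframes):
    """Fill in boxes between hand-labeled keyframes by linear interpolation.

    keyframes: {frame_index: (x, y, width, height)} for a single track ID.
    Returns a box for every frame from the first to the last keyframe.
    """
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    filled = {}
    for f0, f1 in zip(frames, frames[1:]):
        b0, b1 = keyframes[f0], keyframes[f1]
        for f in range(f0, f1):
            t = (f - f0) / (f1 - f0)  # 0.0 at f0, approaching 1.0 at f1
            filled[f] = tuple(a + (b - a) * t for a, b in zip(b0, b1))
    filled[frames[-1]] = tuple(float(v) for v in keyframes[frames[-1]])
    return filled


# Track "car_7" (hypothetical ID), drawn by hand on frames 0 and 4 only.
track = interpolate_track({0: (100, 50, 80, 40), 4: (140, 58, 80, 40)})
for frame, box in sorted(track.items()):
    print(frame, box)
```

Linear interpolation works well for steady motion; when an object turns or accelerates, annotators typically add more keyframes.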

Action Recognition

Action recognition involves identifying and classifying specific actions or events occurring in a video. It is used in applications like video surveillance, human-computer interaction, and anomaly detection. Video annotation for action recognition involves labeling video segments with the corresponding action categories.
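Action labels are typically drawn as time segments, but many models consume one label per frame. A minimal sketch of that expansion, assuming a hypothetical 10 fps clip, end times treated as exclusive, and a “none” background label for frames without an action:

```python
from collections import Counter


def segments_to_frame_labels(segments, n_frames, fps, background="none"):
    """Expand (start_s, end_s, action) segments into one label per frame.

    Frame k gets an action when start_s * fps <= k < end_s * fps (rounded
    to whole frames); all other frames get `background`.
    """
    labels = [background] * n_frames
    for start, end, action in segments:
        first = max(0, round(start * fps))
        last = min(n_frames, round(end * fps))  # exclusive bound
        for k in range(first, last):
            labels[k] = action
    return labels


# A hypothetical 3-second clip at 10 fps with two annotated actions.
labels = segments_to_frame_labels(
    [(0.5, 1.2, "shoot"), (2.0, 2.5, "celebrate")], n_frames=30, fps=10
)
print(Counter(labels))
```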

Video Summarization

Video summarization involves generating a condensed representation of a video, highlighting the key events or information. It is used in applications like video search, news broadcast summarization, and educational videos. Video annotation for video summarization involves identifying key frames, labeling important events, and extracting relevant text or audio descriptions.
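One way to turn key-frame annotations into a summary is to have annotators score each frame’s importance and then keep the top-scoring frames. The sketch below assumes a hypothetical 0 to 1 scoring scheme from two annotators, a fixed frame budget, and a minimum spacing so the summary does not pick near-duplicate neighboring frames.

```python
def select_key_frames(scores, budget, min_gap=1):
    """Pick up to `budget` frames with the highest importance score,
    keeping picks at least `min_gap` frames apart; returns them in time order."""
    chosen = []
    for frame in sorted(range(len(scores)), key=lambda i: scores[i], reverse=True):
        if len(chosen) == budget:
            break
        if all(abs(frame - c) >= min_gap for c in chosen):
            chosen.append(frame)
    return sorted(chosen)


# Hypothetical importance scores (0 to 1) from two annotators, one per frame.
annotator_a = [0.0, 1.0, 0.8, 0.2, 0.2, 0.6, 0.0, 1.0]
annotator_b = [0.2, 0.8, 0.9, 0.2, 0.4, 0.8, 0.2, 0.9]
mean_scores = [(a + b) / 2 for a, b in zip(annotator_a, annotator_b)]
print(select_key_frames(mean_scores, budget=3, min_gap=2))  # [1, 5, 7]
```

Frame 2 scores highly but is skipped because it sits right next to frame 1, which was already chosen.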

Examples of Video Annotation Applications

- Surveillance System with Object Tracking: Tracking the movement of people and vehicles in surveillance footage to detect suspicious activities or potential threats.

- Sports Analytics with Action Recognition: Analyzing sports footage to identify specific player actions, track ball trajectories, and generate performance insights.

- Self-Driving Cars with Video Annotation: Identifying objects, pedestrians, and traffic signs in real-time video streams to enable safe navigation and decision-making.

- Video Search with Video Summarization: Quickly previewing long video clips by identifying key moments and generating summaries to enhance search efficiency.

- Educational Videos with Annotation: Incorporating annotations, such as text overlays, interactive elements, and voice narration, to improve video comprehension and accessibility.

Video annotation plays a critical role in various fields, from security and entertainment to education and research. By labeling and classifying video data, we empower machine learning models to analyze complex visual sequences, enabling a wide range of applications that enhance our understanding of the world around us and improve the effectiveness of video-based technologies.

In AI, data annotation bridges the gap between raw information and machine comprehension. From meticulously labeling images, text, videos, and audio to unleashing the potential of these annotations across industries, this is how the power of teaching machines to ‘understand’ our world is realized. As we continually refine and innovate annotation techniques, we pave the way for AI to decipher complexities, fueling progress in technology, healthcare, security, and beyond. Data annotation fuels the models of the future, moving us toward a world where AI can solve complex problems and change what we thought was possible.